Detecting player position during a badminton rally

Overview:

Object detection models are typically trained on datasets that cover many common object categories; COCO and VOC are two of the most widely used. Because these datasets contain a large number of everyday objects, models trained on them can be used to detect those categories in real-life scenes.

In this tutorial we use the person category to track the player or players during a badminton rally and plot their positions on a standard badminton court plane. This can be seen as a first step in a sports-analytics pipeline, which might also include pose detection to classify different shots and so generate automatic reports of a player's game. Fast models for tasks such as object detection and pose estimation open up many opportunities, even at the amateur level.

We use an object-detection module, trained on the COCO dataset, for person detection. Its accuracy is not state of the art, but it is fast enough to run on any consumer CPU in the field. You can substitute a different object-detection model when following this tutorial.

import numpy as np

import cv2

import matplotlib.pyplot as plt

cap = cv2.VideoCapture("./tty_sindhu.mp4")

ret,frame = cap.read()

Transformation:

Since we want to display a player's movements on a standard court, given a video of the player's game, we need to transform coordinates from one frame (the source) to another frame (the destination).

We can assume that a matrix exists that transforms the source coordinates to the destination coordinates. If no exact solution exists, we can use least squares to find a solution that is good enough for our case.

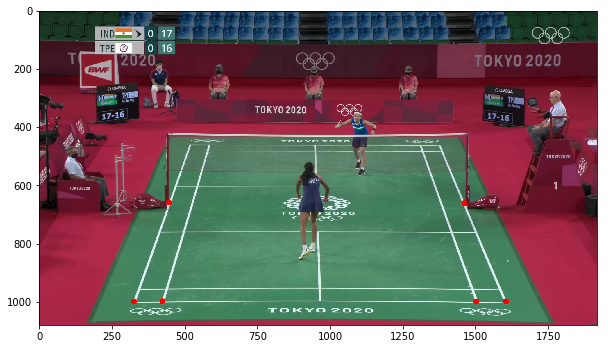
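One way to picture such a matrix: if each point (x, y) is written as the row [x, y, 1], a 3x2 matrix can scale, shear and shift it in a single multiplication. The snippet below is a minimal sketch of that idea. The matrix `demo_M` and the helper `to_homogeneous` are made up purely for illustration and are not part of the tutorial's pipeline.

```python
import numpy as np

# Hypothetical affine map, chosen only for illustration:
# scale x by 0.5, scale y by 2, then shift by (10, -5).
demo_M = np.array([[0.5, 0.0],
                   [0.0, 2.0],
                   [10.0, -5.0]])


def to_homogeneous(points):
    """Append a column of ones so the last row of the matrix acts as a shift."""
    points = np.asarray(points, dtype=np.float64)
    return np.hstack([points, np.ones((points.shape[0], 1))])


demo_src = [[0, 0], [100, 50]]
demo_dst = to_homogeneous(demo_src) @ demo_M
print(demo_dst)  # one transformed (x, y) pair per row
```

The extra column of ones is what lets a single matrix product carry the shift as well as the scaling.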

Source Coordinates:

Source coordinates refer to court markings that are visible in the current video.

We select six markings from the bottom half of the badminton court as source coordinates.

# Format is (x, y); the top-left corner of the image is the origin (0, 0).

DOUBLE_BOTTOM_LEFT_SRC = [326,999]

DOUBLE_TOP_LEFT_SRC = [447,658]

DOUBLE_BOTTOM_RIGHT_SRC = [1606,998]

DOUBLE_TOP_RIGHT_SRC = [1464,660]

SINGLE_BOTTOM_LEFT_SRC = [424,998]

SINGLE_BOTTOM_RIGHT_SRC = [1504,998]

src_coords = np.array([DOUBLE_BOTTOM_LEFT_SRC, DOUBLE_TOP_LEFT_SRC, DOUBLE_BOTTOM_RIGHT_SRC, DOUBLE_TOP_RIGHT_SRC, SINGLE_BOTTOM_LEFT_SRC, SINGLE_BOTTOM_RIGHT_SRC])

src_coords_final = np.hstack((src_coords,np.ones((src_coords.shape[0],1)))).astype("float32")

for (x,y) in src_coords:

    cv2.circle(frame,(int(x),int(y)),radius=10,color=(0,0,255),thickness=-1)

plt.figure(figsize=(10,10))

plt.imshow(frame[:,:,::-1])

plt.show()

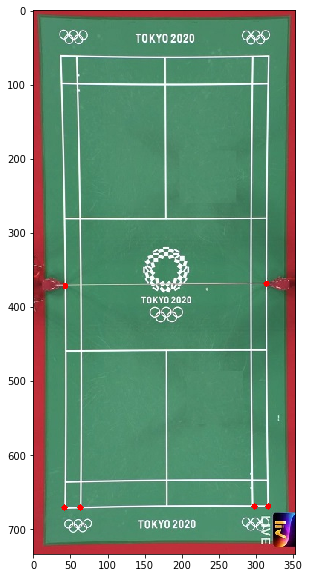

Destination coordinates: these refer to the corresponding points on an image of a STANDARD badminton court, as shown below.

court = cv2.imread("./court_0.jpg")

DOUBLE_BOTTOM_LEFT_DST = [42,671]

DOUBLE_TOP_LEFT_DST = [43,372]

DOUBLE_BOTTOM_RIGHT_DST = [316,669]

DOUBLE_TOP_RIGHT_DST = [314,369]

SINGLE_BOTTOM_LEFT_DST = [63,671]

SINGLE_BOTTOM_RIGHT_DST = [298,669]

dst_coords = np.array([DOUBLE_BOTTOM_LEFT_DST, DOUBLE_TOP_LEFT_DST, DOUBLE_BOTTOM_RIGHT_DST, DOUBLE_TOP_RIGHT_DST, SINGLE_BOTTOM_LEFT_DST, SINGLE_BOTTOM_RIGHT_DST]).astype("float32")

for (x,y) in dst_coords:

    cv2.circle(court,(int(x),int(y)),radius=4,color=(0,0,255),thickness=-1)

plt.figure(figsize=(10,10))

plt.imshow(court[:,:,::-1])

plt.show()

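We find the transformation matrix using least squares, that is, by minimizing the squared distance between the transformed source coordinates and the destination coordinates, using numpy.linalg.lstsq. Note that the call below passes only the first three point pairs, so the three-row system is solved exactly rather than fitted across all six points.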

from numpy.linalg import lstsq

M_transform = lstsq(src_coords_final[:3,:],dst_coords[:3,:],rcond=-1)[0]

print(M_transform)

[[ 2.1411957e-01 -8.7771768e-04]

[ 7.3045358e-02 8.7652141e-01]

[-1.0077529e+02 -2.0435873e+02]]
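For comparison, the same least-squares call can be given all six correspondences instead of the first three. The sketch below is not what the tutorial does. It copies the coordinates from above and uses illustrative names (`pts_src`, `pts_dst`, `M_three`, `M_all`, `sum_sq_error`) so that it does not overwrite `M_transform`. It also uses float64 and `rcond=None`, which are choices made here rather than in the original code.

```python
import numpy as np

# The six court markings from the tutorial (video frame -> court image).
pts_src = np.array([[326, 999], [447, 658], [1606, 998],
                    [1464, 660], [424, 998], [1504, 998]], dtype=np.float64)
pts_dst = np.array([[42, 671], [43, 372], [316, 669],
                    [314, 369], [63, 671], [298, 669]], dtype=np.float64)

# Homogeneous source points: one row [x, y, 1] per marking.
pts_src_h = np.hstack([pts_src, np.ones((len(pts_src), 1))])

# Fit from the first three pairs only (as in the tutorial) and from all six.
M_three, *_ = np.linalg.lstsq(pts_src_h[:3], pts_dst[:3], rcond=None)
M_all, *_ = np.linalg.lstsq(pts_src_h, pts_dst, rcond=None)


def sum_sq_error(M):
    """Sum of squared distances between mapped source and destination points."""
    return float(np.sum((pts_src_h @ M - pts_dst) ** 2))


print("three-point fit, error over all six points:", sum_sq_error(M_three))
print("six-point fit,   error over all six points:", sum_sq_error(M_all))
```

The six-point fit spreads the error over every marking, whereas the three-point fit reproduces its own three points and leaves the rest unconstrained.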

np.matmul(src_coords_final,M_transform)

array([[ 41.999992, 671. ],

[ 43.000008, 372.00003 ],

[316. , 669. ],

[260.90567 , 372.86044 ],

[ 62.910667, 670.0375 ],

[294.1598 , 669.0896 ]], dtype=float32)

dst_coords

array([[ 42., 671.],

[ 43., 372.],

[316., 669.],

[314., 369.],

[ 63., 671.],

[298., 669.]], dtype=float32)
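Comparing the two arrays, the first three points are reproduced almost exactly, but the fourth lands near x = 260.9 instead of 314. An affine matrix cannot represent the perspective of the camera view. A 3x3 homography can, and the sketch below estimates one with the direct linear transform. This is a swapped-in technique, not the tutorial's method. The names `fit_homography` and `apply_homography` are hypothetical helpers written for this example. OpenCV's `cv2.findHomography` could serve a similar purpose.

```python
import numpy as np


def fit_homography(src, dst):
    """Estimate a 3x3 homography H with the direct linear transform (DLT)."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Two linear constraints on the nine entries of H per point pair.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    A = np.asarray(rows, dtype=np.float64)
    # The right singular vector of the smallest singular value minimizes |A h|.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]


def apply_homography(H, pts):
    """Map (x, y) points through H and divide by the projective coordinate."""
    pts = np.asarray(pts, dtype=np.float64)
    pts_h = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = pts_h @ H.T
    return mapped[:, :2] / mapped[:, 2:3]


# Same six markings as in the tutorial.
court_src = np.array([[326, 999], [447, 658], [1606, 998],
                      [1464, 660], [424, 998], [1504, 998]], dtype=np.float64)
court_dst = np.array([[42, 671], [43, 372], [316, 669],
                      [314, 369], [63, 671], [298, 669]], dtype=np.float64)

H = fit_homography(court_src, court_dst)
print(apply_homography(H, court_src))  # compare row by row with court_dst
```

To use it in the display loop, the player's foot point would be passed through `apply_homography` in place of the `np.matmul` with `M_transform`.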

We now have a transformation matrix. Let us see how it looks on the full video.

For display purposes we use the mid-point of the bottom edge of the detected person's bounding box.
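The bottom-centre choice can be sketched as a small helper, shown here on its own before it appears inline in the loop. The function name `box_to_court`, the identity-like matrix and the court size are assumptions made for this example only.

```python
import numpy as np


def box_to_court(box, M, court_w, court_h):
    """Map the bottom-centre of a (left, top, right, bottom) box through a
    3x2 affine matrix M and clamp the result to the court image bounds."""
    left, top, right, bottom = box[:4]
    foot = np.array([(left + right) / 2.0, bottom, 1.0])  # homogeneous foot point
    x_t, y_t = foot @ M
    x_t = min(max(0.0, x_t), court_w - 1)
    y_t = min(max(0.0, y_t), court_h - 1)
    return int(x_t), int(y_t)


# Hypothetical matrix that leaves coordinates unchanged, for illustration.
M_identity = np.array([[1.0, 0.0],
                       [0.0, 1.0],
                       [0.0, 0.0]])

inside = box_to_court((100, 50, 140, 200), M_identity, court_w=360, court_h=720)
outside = box_to_court((500, 0, 600, 900), M_identity, court_w=360, court_h=720)
print(inside, outside)
```

The bottom edge is used because the feet are the part of the player that touches the court plane. Any other point on the box sits above the plane and would be projected to the wrong place.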

import object_detection_python_module as od #import the module

od.load_model("./objectdetection.bin") #initialize the model and load weights.

court = cv2.imread("./court_0.jpg")

cap = cv2.VideoCapture("./tty_sindhu.mp4")

ret,frame = cap.read()

COURT_H = court.shape[0]

COURT_W = court.shape[1]

while ret:

    predictions = od.detect_object(frame,conf_threshold=0.9,class_index=0) #class_index = 0 --> person category

    num_persons_detected = predictions.shape[0]

    #selecting only top/single person for display-purposes.

    if num_persons_detected > 0:

        left,top,right,bottom,confidence,class_id = predictions[0]

        #mid-point of the bottom edge of the bounding box

        x = (left + right)/2

        y = bottom

        t_coordinates = np.matmul(np.array([[x,y,1]]).astype("float32"),M_transform)

        x_t = t_coordinates[0,0]

        y_t = t_coordinates[0,1]

        #clamp the transformed point to the court image

        x_t = min(max(0,x_t),COURT_W-1)

        y_t = min(max(0,y_t),COURT_H-1)

        cv2.rectangle(frame,(int(left),int(top)),(int(right),int(bottom)),(0,255,0),1)

        cv2.circle(court,(int(x_t),int(y_t)),radius=6,color=(255,0,0),thickness=-1)

    frame[0:COURT_H,0:COURT_W,0:3] = court #overlay court pixels on to the frame.

    cv2.imshow("frame",frame)

    if cv2.waitKey(1) == -1: #no key pressed, read the next frame

        ret,frame = cap.read()

    else: #any key press stops playback

        break

cap.release()

cv2.destroyAllWindows()

Final Result:

The resulting video, shown below, runs end to end at 26 fps (including the time taken to render the video) on my quad-core Intel i7 CPU.

from IPython.display import HTML

HTML("""

<div align="center">

<video width="80%" controls>

<source src="./tutorial_badminton_track_files/badminton_2.mp4" type="video/mp4">

</video></div>""")
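A frame rate like the one quoted above can be measured by timing the whole loop and dividing the frame count by the elapsed time. The sketch below shows one way to do that. The helper `measure_fps` and the stand-in workload are hypothetical, and this is not necessarily how the 26 fps figure was obtained.

```python
import time


def measure_fps(process_frame, frames):
    """Run process_frame on every frame and return frames processed per second."""
    start = time.perf_counter()
    count = 0
    for frame in frames:
        process_frame(frame)
        count += 1
    elapsed = time.perf_counter() - start
    return count / elapsed if elapsed > 0 else float("inf")


# Stand-in workload: summing a small list plays the role of detect + draw.
dummy_frames = [[1, 2, 3]] * 1000
fps = measure_fps(sum, dummy_frames)
print(f"{fps:.1f} frames per second")
```

In the tutorial's loop, `process_frame` would wrap detection, transformation and drawing, so the measured rate includes rendering time.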

Remarks:

In this case, we manually annotated the court markings in the first frame of the rally video to obtain a linear (affine) transformation matrix. Since badminton courts have clear markings, we could instead detect the corners or perimeter automatically to get the source coordinates, using off-the-shelf edge detectors or neural-network-based detectors. For amateur courts, a calibration protocol could be established to register the corners.

For more in-depth literature on automatic, full analysis of badminton, see https://cvit.iiit.ac.in/research/projects/cvit-projects/badminton-analytics.

Resources:

Original video: https://www.youtube.com/watch?v=W4EGrNeFlys

API used: